By Alvaro Orsi, ESR data science lead

Artificial intelligence (AI) is rapidly transforming our world. While the potential benefits of AI are immense, many organisations are hesitant to fully embrace the technology due to concerns about its risks and unintended consequences. However, by taking a proactive and responsible approach to AI adoption, we can harness its power to drive innovation and efficiency while mitigating potential downsides.

The ethics of AI

Discussions about AI ethics and responsible development are not new. Researchers, philosophers and computer scientists have been studying and debating these issues for decades.

In his famous 1942 short story Runaround, Isaac Asimov introduced the ‘Three Laws of Robotics’ as a set of fundamental safety rules to govern the behaviour of intelligent machines. While used as a literary device, Asimov's Three Laws highlighted key ethical considerations around ensuring advanced AI systems remain under meaningful human control and have robust safeguards to prevent them from causing unintended harm.

Asimov's work kicked off decades of debate and analysis about the relationship between advanced AI systems and human values and interests.

Today, well-established frameworks and guidelines exist to help organisations develop AI systems in an ethical manner. At ESR, our data science work follows an internal Responsible AI framework as well as the Algorithm Charter for New Zealand to ensure we build AI that is transparent, fair and aligned with human values. These frameworks cover key ethical principles such as transparency, explainability, fairness, non-discrimination, privacy and data protection. By instituting strong governance and emphasising these principles, we can proactively manage the risks of AI while realising its benefits.

The risk of not using AI

While the risks of AI should not be ignored, it is time we recognised the risk we face by not adopting AI at all. AI has the potential to help solve major societal challenges, from improving healthcare access and outcomes to enhancing food safety and reducing inequities. By choosing not to leverage AI, or by moving too slowly, we risk perpetuating the status quo and falling behind as the technology rapidly advances elsewhere.

This is especially critical in the agriculture sector. The United Nations Food and Agriculture Organization (FAO) projects that with current agricultural practices, the world risks running out of food by 2050 due to population growth coupled with inadequate use of land [1]. Climate change poses additional threats to food production systems. In this context, it is imperative that we harness advanced technologies like AI to explore alternative agriculture practices and secure an adequate food supply for all. With global food demand rising and natural resources under strain, maintaining the status quo in farming is simply not an option. AI will be essential for doing more with less.

The COVID-19 pandemic has served as another stark reminder of the critical importance of leveraging AI tools for pandemic preparedness and response.

Consider the deep learning tool currently being developed by ESR to forecast COVID-19 hospitalisations. This AI system can predict the number of hospitalisations with remarkable accuracy, allowing health officials to anticipate surges and allocate resources accordingly. The pandemic overwhelmed healthcare systems around the world and stretched staff and equipment to breaking point, so technology that supports resource allocation and anticipates outbreaks is critical.

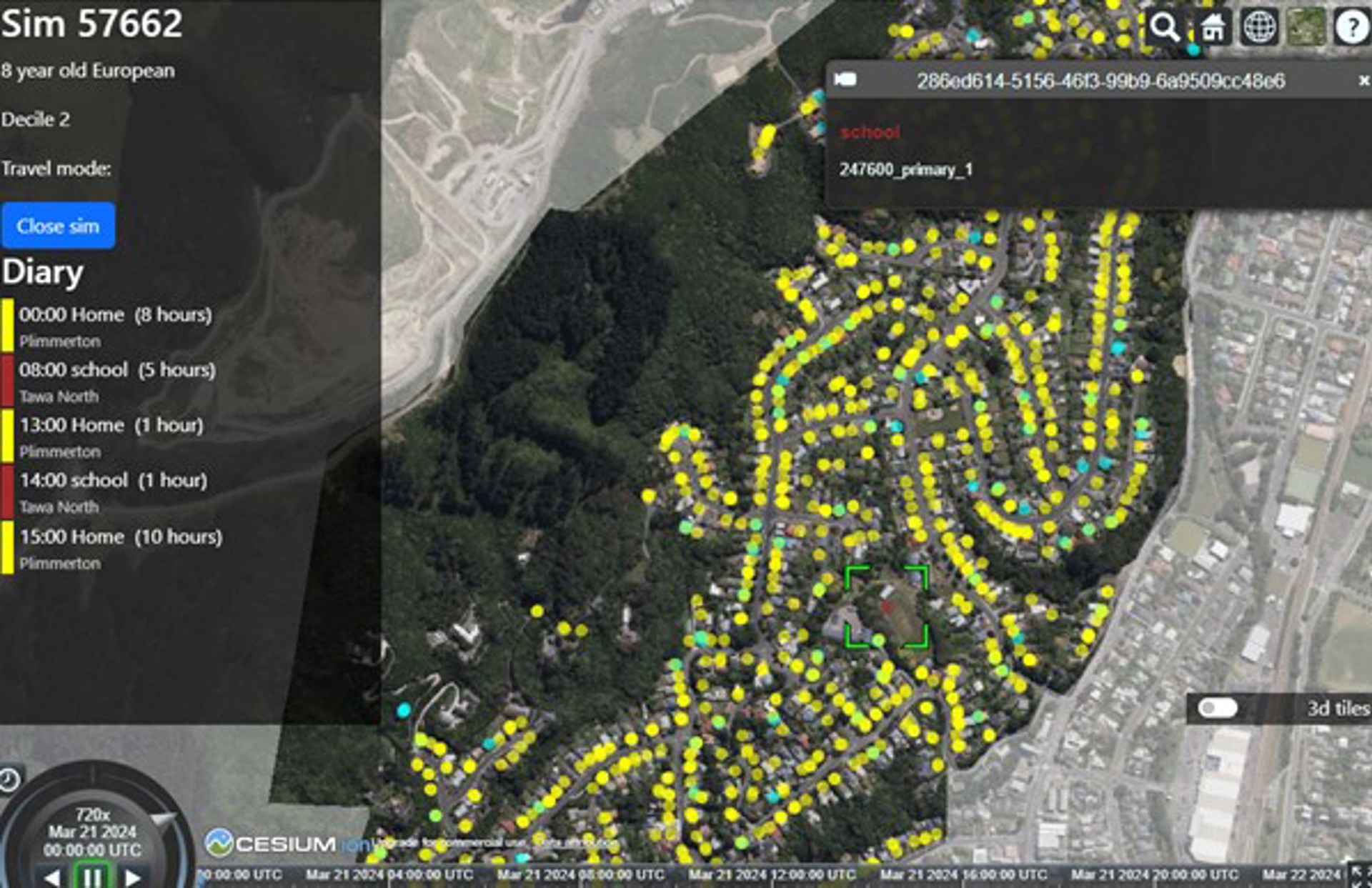

The potential of AI extends far beyond forecasting. ESR has developed a Digital Twin platform (see Figure 1) integrating cutting-edge AI-powered agent-based models. This tool allows us to simulate the behaviour and interactions of millions of synthetic agents (i.e. simulated humans) as they navigate their lives in a virtual city. By running various scenarios, such as adjusting the number of individuals getting vaccinated or imposing a lockdown on schools, we can study the potential risks and impacts of disease outbreaks, identify vulnerable communities, and develop targeted intervention strategies. We can do all of this without playing out those scenarios in real life and risking the lives of real people.
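To make the scenario idea concrete, here is a deliberately tiny agent-based sketch in Python. It is not ESR's Digital Twin platform and does not reflect how that platform is built: the function name `simulate_outbreak`, the random-mixing contact model, and every parameter value are hypothetical, chosen only to illustrate how changing one input (the fraction of vaccinated agents) changes the simulated outcome.

```python
import random


def simulate_outbreak(n_agents=2000, vaccinated_fraction=0.0,
                      contacts_per_day=8, transmission_prob=0.05,
                      infectious_days=5, initial_infected=10,
                      days=120, seed=0):
    """Toy agent-based outbreak model (illustrative assumptions only).

    Returns the total number of agents infected during the simulation.
    """
    rng = random.Random(seed)

    # Agent states: "S" susceptible, "I" infectious, "R" recovered, "V" vaccinated.
    state = ["S"] * n_agents
    days_left = [0] * n_agents

    # Scenario lever: vaccinate a random share of agents before the outbreak.
    # Assumption: vaccination gives full protection in this toy model.
    for i in rng.sample(range(n_agents), int(vaccinated_fraction * n_agents)):
        state[i] = "V"

    # Seed the outbreak among agents who are still susceptible.
    susceptible = [i for i in range(n_agents) if state[i] == "S"]
    seeds = rng.sample(susceptible, min(initial_infected, len(susceptible)))
    for i in seeds:
        state[i] = "I"
        days_left[i] = infectious_days
    ever_infected = len(seeds)

    for day in range(days):
        infectious = [i for i in range(n_agents) if state[i] == "I"]
        if not infectious:
            break  # the outbreak has died out

        # Each infectious agent meets a few randomly chosen agents per day.
        newly_infected = set()
        for i in infectious:
            for _ in range(contacts_per_day):
                j = rng.randrange(n_agents)
                if state[j] == "S" and rng.random() < transmission_prob:
                    newly_infected.add(j)

        # Infectious agents recover once their infectious period ends.
        for i in infectious:
            days_left[i] -= 1
            if days_left[i] == 0:
                state[i] = "R"

        for j in newly_infected:
            state[j] = "I"
            days_left[j] = infectious_days
        ever_infected += len(newly_infected)

    return ever_infected


if __name__ == "__main__":
    # Compare scenarios by changing a single input and re-running the model.
    for fraction in (0.0, 0.4, 0.7):
        total = simulate_outbreak(vaccinated_fraction=fraction)
        print(f"vaccinated {fraction:.0%}: {total} agents infected")
```

A realistic model would replace the random mixing with households, schools, workplaces and travel patterns, but the workflow is the same: change an input, re-run the simulation, and compare outcomes.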

The consequences of not embracing AI in pandemic preparedness and response efforts are too grave to ignore. Without these cutting-edge tools, we risk missing early warning signals, failing to anticipate disease dynamics, misallocating scarce resources, and ultimately perpetuating health inequities across our communities. In a world where every second and every dollar counts, can we afford to be caught off-guard, scrambling to respond to a crisis that could have been mitigated or even prevented?

Figure 1: A screenshot of our digital twin platform showing the location of individuals and the travel diary of a single virtual agent.

What's more, if we don't invest in developing our own AI capabilities, we may end up forced to adopt AI systems built overseas that don't properly account for our unique cultural context and values. Should we trust that tech giants from overseas will address Māori data sovereignty adequately when deploying AI systems for New Zealand?

It's crucial that we take an active role in shaping the future of AI. By becoming developers of the technology, not just consumers, we can ensure it aligns with our societal priorities and ethical principles.

This doesn't mean we should adopt AI carelessly or ignore the risks. But it does mean the risk of inaction is real. We must proactively engage with AI while embedding the right safeguards and governance structures in the development cycle of the technology. Retreating from the AI revolution is not an option if we want to thrive in an AI-powered future.

Powerful technologies have unintended consequences

As with any transformative new technology, the full implications of AI are impossible to predict. Let’s explore a few examples.

One of the first encounters of human civilisation with an advanced AI system occurred when online recommendation algorithms were first deployed at scale. E-commerce companies and social media sites introduced these systems to improve the user experience by promoting content tailored to each user. In the early days of this technology, few anticipated that these systems would eventually contribute to the polarisation of political thought, inflammatory speech and the spread of misinformation.

The story of AlphaGo provides another instructive example. AlphaGo was a ground-breaking AI system developed by Google's DeepMind to master the ancient Chinese game of Go [2]. It initially trained on expert human games before playing millions of games against itself, continually improving through trial and error. In 2016, AlphaGo famously defeated Lee Sedol, one of the world's top Go players. It was the first time a computer program had beaten an elite human at the full game of Go.

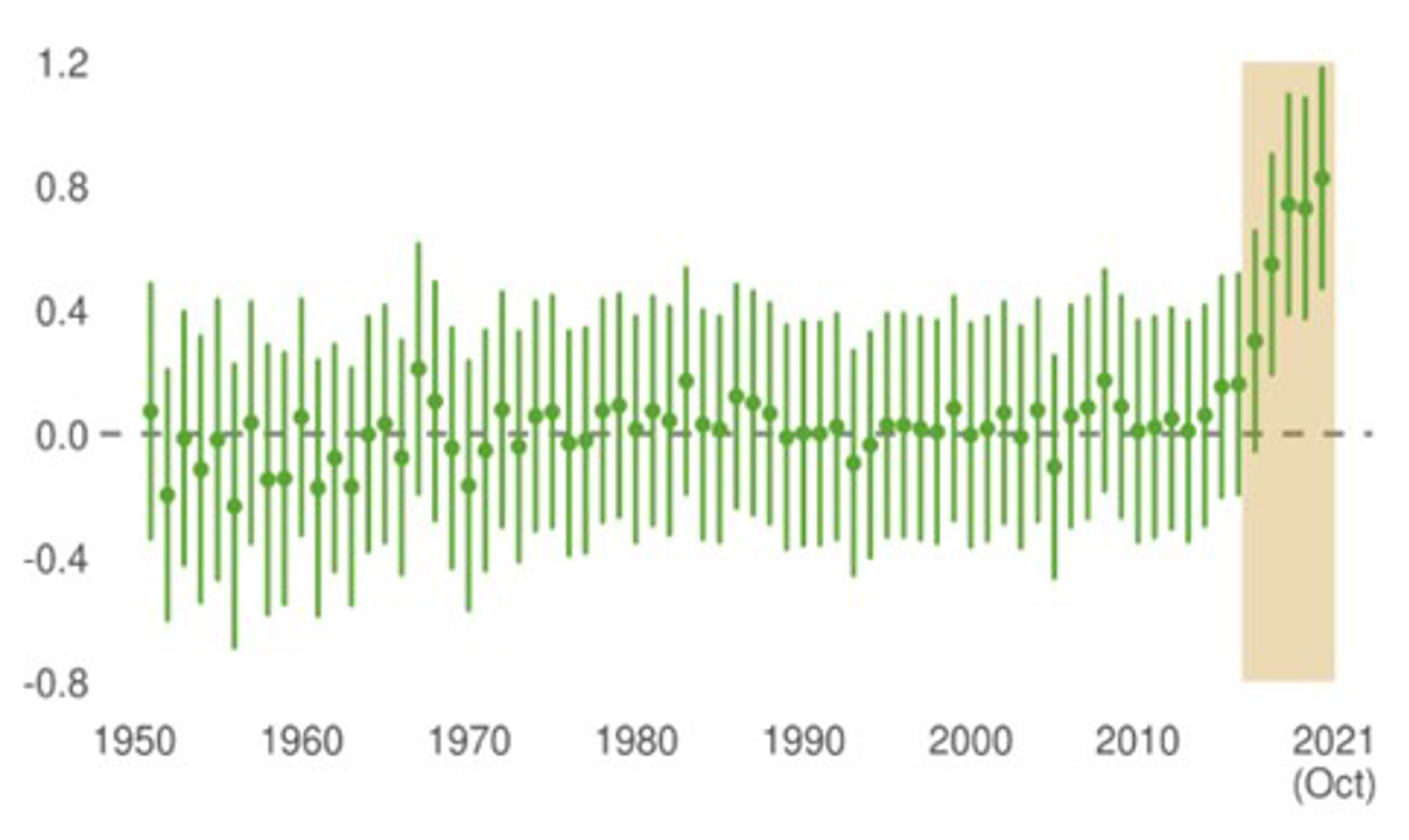

A very interesting outcome is that in the years since, human Go players have actually improved their skills by learning from and practising against AI (see Figure 2). Go players have discovered completely new ways of developing successful strategies, leading to an AI-powered revolution in the knowledge about the game.

Magnus Carlsen, the five-time world chess champion, is another example of learning from AI. Carlsen has leveraged AI tools throughout his career to take his game to new heights. He is now widely considered one of the greatest chess players of all time. Would Carlsen have achieved the same level of mastery without AI? It's impossible to know for sure. What is clear is that human-AI collaboration has transformed the world of chess.

In the same way, the rise of large language models and other generative AI tools will likely have far-reaching impacts on how we work and create. Some of these impacts will be positive, like enhanced productivity or the potential to accelerate scientific discovery [4], while others may be more disruptive or challenging to navigate. The key is to remain adaptable and proactively address issues as they emerge.

Figure 2: Decision quality of professional Go players as evaluated by an algorithm performing at superhuman level. Decision quality significantly increased after Lee Sedol was beaten by AlphaGo on March 15, 2016 (shaded area). Taken from Brinkmann et al. (2023) [3].

A responsible path forward

Adopting AI is not without risks, but it would be a mistake to let fear hold us back. To fully harness the immense positive potential of AI while avoiding the worst pitfalls, we must proactively implement robust safeguards and governance frameworks. This includes embedding transparency and fairness into AI systems from the ground up, conducting rigorous security testing before deployment, and establishing clear accountability measures. Additionally, facilitating open public dialogue and investing in research on AI's societal risks, such as bias mitigation and privacy protection, is crucial. By taking a comprehensive approach to AI risk management, encompassing technical, legal, and ethical considerations, we can navigate the challenges and reap the transformative benefits of this powerful technology responsibly and safely.

There is also a huge opportunity for us to become active players in shaping the future of AI. By investing in AI capabilities, we can carve out a niche and develop AI systems that are culturally relevant to address our specific challenges. We have an opportunity to show the world what a unique Kiwi approach to AI looks like.

Of course, pioneering new technologies is never a smooth process. To create something meaningful and worthwhile, we will venture into uncharted territory. Missteps, setbacks and mistakes are inevitable in this journey. What's important is that we learn from those mistakes, and never lose sight of our values and our responsibilities to society.

The future is uncertain, but with the right approach, it is bright. By embracing AI with wisdom, care, and proactive risk management, we can create a better tomorrow for all. The AI revolution is here - it's up to us to make the most of it.

References

[1] https://www.fao.org/fileadmin/templates/wsfs/docs/Issues_papers/HLEF2050_Global_Agriculture.pdf

[2] https://www.nature.com/articles/nature16961